System Requirements:

- IPMI/BMC-equipped hardware

- Sideband or Dedicated IPMI interfaces

The Problem:

I have recently been saved (and hindered) by having to pull the less often used IPMI tool from the proverbial administrator’s utility belt. In doing so I found a couple of design issues that you might like to consider if you are starting out with this technology.

More Info

These are a couple of design gotchas to keep in mind.

IPMI Physical & Logical Network Design

If you want to implement IPMI, what should you and should you not be doing? Having looked around and thought about it, this seems to be a poorly documented topic, so I wanted to write up some thoughts on the subject.

Physical: Sideband or Dedicated Port?

Sadly this is not always a choice, as your hardware will likely support one or the other. Some Dell hardware offers the option to use either the dedicated port or a logical MAC address presented through one of the motherboard-integrated NICs (the so-called LOM, or LAN on Motherboard, interfaces, which are sometimes referred to as sideband IPMI interfaces).

With a sideband interface, the physical NIC (or NICs) is allocated a second Ethernet MAC address in software, through which the IPMI controller listens for traffic destined for it. By definition there has to be a penalty for doing this, even if it is an extremely minor one, as the NIC must check which MAC address each inbound packet is destined for and redirect it accordingly. Most of the time traffic will be destined for the production network rather than the IPMI virtual interface, so this processing overhead is incurred needlessly.
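The per-frame check described above can be sketched as a simple destination-MAC filter. This is a simplified, hypothetical model: the MAC addresses are made up, and real NIC/BMC firmware does this in hardware with vendor-specific rules (multicast handling in particular). It shows that every production frame still has to be compared against the BMC's MAC.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"


def deliver(dest_mac: str, host_mac: str, bmc_mac: str) -> set:
    """Return which consumers ('host', 'bmc') receive a frame sent to dest_mac."""
    dest = dest_mac.lower()
    if dest == BROADCAST:
        # Broadcasts such as ARP requests must reach both the OS and the BMC.
        return {"host", "bmc"}
    if dest == bmc_mac.lower():
        # Frames for the sideband MAC are diverted to the BMC.
        return {"bmc"}
    if dest == host_mac.lower():
        return {"host"}
    # Unicast frames for neither MAC are dropped by the NIC's filter.
    return set()


HOST_MAC = "00:1a:2b:3c:4d:5e"  # hypothetical OS-facing MAC
BMC_MAC = "00:1a:2b:3c:4d:5f"   # hypothetical sideband BMC MAC

# A production frame: the BMC comparison still happens before it is delivered.
print(deliver(HOST_MAC, HOST_MAC, BMC_MAC))  # {'host'}
```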

On the other hand, using the sideband interface removes one cable and one switch port per server from your rack. Scaled up to a 50U rack full of 1U servers, that is 50 cables requiring 50 switch ports, which could mean two or more extra switches just to terminate the additional Ethernet cabling. It is for this reason that sideband ports are a viable option. Some IPMI implementations also allow you to change the physical LOM port in software, while others are fixed.
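The switch-count arithmetic behind that trade-off is just a ceiling division. This sketch assumes a 50U rack filled with 1U servers (one dedicated IPMI cable each) and ignores any ports reserved for uplinks.

```python
import math


def extra_switches(servers: int, ports_per_switch: int) -> int:
    """Switches needed purely to terminate one dedicated IPMI cable per server."""
    return math.ceil(servers / ports_per_switch)


# Assumption: 50U rack of 1U servers, i.e. 50 dedicated IPMI cables.
SERVERS = 50
for ports in (24, 48):
    print(f"{ports}-port switches needed: {extra_switches(SERVERS, ports)}")
# 24-port switches needed: 3
# 48-port switches needed: 2
```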

Both therefore have their uses, although in general terms I prefer dedicated management channels, as they mean there is less to potentially go wrong with production systems and fewer accidental pulls of a shared sideband LOM cable, because you can colour code your cabling to represent the fact that “blue equals IPMI/ILO/iDRAC etc.”

You do not always have the luxury of choice, however. Servers such as the Dell PowerEdge 1950 that do not have an iDRAC card have no dedicated port option, and the software (sideband) LOM interface is fixed on LOM port #1. Consequently, I offer the following advice:

- If you need to NIC team (e.g. LACP) across the motherboard NIC and any other expansion NIC, do not include the IPMI sideband port’s VLAN in the team, otherwise you may find that IPMI traffic never finds its way up the correct bit of copper!

- If you need to team the LOM (two or more ports on the LOM only, with no expansion cards included), check whether this is supported by the server vendor. If the BMC listens on only a single LOM port and your hardware does not support teaming, you will encounter the same problem as outlined above: IPMI commands may simply get lost because they never make it to the correct port.*

- If you are not going to be using NIC teaming, set the IPMI sideband port to be the port that services your management network, not your iSCSI or production networks. This allows you to reconfigure the NIC without impacting production services and to make the IPMI NIC a member of the management network directly, make it a member of an alias management network for IPMI traffic, or assign it to a VLAN that splits IPMI and non-IPMI management traffic.

- Unless you have a rack/switch density issue or do not have the budget for dedicated IPMI switches, I recommend using the dedicated port option on your hardware if available. Let’s be honest: a dedicated IPMI switch, even here in 2015, can safely be a 10/100 Fast Ethernet switch; you do not need 10GbE hardware to issue the occasional 2-byte PSU reset command. This is especially true when you realise that Serial Over LAN (SOL) itself only operates at between 9600 bps and 115200 bps to provide serial console access via the IPMI system.
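To put the SOL rates in perspective, here is a back-of-the-envelope calculation. It assumes an 80x25 text console, 8N1 serial framing (10 bits on the wire per character) and a 100 Mbit/s Fast Ethernet switch, and it ignores the IPMI/RMCP+ packet overhead around SOL data, so the link share is a lower bound.

```python
# Assumptions: 80x25 console, 8N1 framing, 100 Mbit/s Fast Ethernet.
BITS_PER_CHAR = 10
SCREEN_CHARS = 80 * 25
FAST_ETHERNET_BPS = 100_000_000


def screen_redraw_seconds(baud: int) -> float:
    """Time for a full console repaint at the given serial rate."""
    return SCREEN_CHARS * BITS_PER_CHAR / baud


def share_of_link(baud: int, link_bps: int = FAST_ETHERNET_BPS) -> float:
    """Fraction of the link a saturated SOL stream uses (packet overhead ignored)."""
    return baud / link_bps


for baud in (9600, 115200):
    print(f"{baud:>6} bps: redraw {screen_redraw_seconds(baud):.2f} s, "
          f"{share_of_link(baud):.4%} of Fast Ethernet")
#   9600 bps: redraw 2.08 s, 0.0096% of Fast Ethernet
# 115200 bps: redraw 0.17 s, 0.1152% of Fast Ethernet
```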

* I believe that Dell 9th-generation and later servers all support NIC teaming on the LOM, whereas 8th-generation and older servers will have issues with NIC teaming. Dell’s solution is that all LOM NICs listen for IPMI traffic while only the named IPMI port sends IPMI responses and event trap data. Dell firmware refers to this as “Shared Mode”, while “Failover Mode” simply adds the option to dynamically move the IPMI Tx channel to a different live port in the event that NIC 1 fails.

Source: Dell OpenManage Baseboard Management Controller Utilities User’s Guide
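The teaming problem and the Shared/Failover behaviour described above can be sketched as a small model. This is hypothetical and not Dell firmware: the switch's team hash is stood in for by a CRC32 over the MAC pair (real switches use vendor-specific hash policies), and the MAC addresses are made up. It shows why a BMC listening on LOM 1 only misses some requests once the switch spreads frames across a team, and how Failover Mode keeps replies flowing when NIC 1 is down.

```python
import zlib
from typing import Dict, List, Optional

# Hypothetical model of the footnote above; not vendor firmware.
LOM_PORTS = [1, 2]


def switch_picks_port(src_mac: str, dst_mac: str, members: List[int]) -> int:
    """Stand-in for a switch's team hash: one member port per MAC pair."""
    key = f"{src_mac}-{dst_mac}".encode()
    return members[zlib.crc32(key) % len(members)]


def bmc_receives(port: int, mode: str) -> bool:
    """'single': BMC listens on LOM 1 only; 'shared'/'failover': on every LOM."""
    if mode in ("shared", "failover"):
        return port in LOM_PORTS
    return port == 1


def tx_port(mode: str, link_up: Dict[int, bool]) -> Optional[int]:
    """Port the BMC sends responses and event traps from (None = silent)."""
    if link_up.get(1):
        return 1
    if mode == "failover":
        # Failover mode moves transmit to another live LOM port.
        for port in LOM_PORTS:
            if link_up.get(port):
                return port
    return None  # NIC 1 down and no failover: replies are never sent.


BMC_MAC = "00:1a:2b:3c:4d:5f"  # hypothetical BMC MAC
stations = [f"02:00:00:00:00:{i:02x}" for i in range(8)]  # hypothetical clients
for mode in ("single", "shared"):
    hits = sum(bmc_receives(switch_picks_port(s, BMC_MAC, LOM_PORTS), mode)
               for s in stations)
    print(f"{mode}: {hits}/{len(stations)} requests reach the BMC")
print(tx_port("failover", {1: False, 2: True}), tx_port("shared", {1: False, 2: True}))
# last line prints: 2 None
```

In "shared" mode every request arrives regardless of which member the hash picks; in "single" mode only the requests hashed onto LOM 1 do.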

Logical: Isolation/No-Isolation

If you actually look into IPMI as a technology, it is fair, I think, to say that it is not the most secure, particularly if you don’t spend time setting up manual encryption keys on each of your servers. So what can, or should, you do with its traffic?

Firstly, it depends on how complicated you want or need it to be, and secondly, on whether or not you have managed switches. If you do NOT have managed switches, then the best that you can muster is one of the following three options:

- Assign address allocations to the IPMI interfaces inside your production LAN (Hint: Do Not Do This)

- If you are not willing to have dedicated switches or sacrifice a physical server NIC port as a dedicated IPMI port: Assign address allocations to the IPMI interfaces on the servers and access them as an overlay network using IP Alias Addresses (see below for more details)

- If you are willing to have dedicated switches or sacrifice a physical server NIC port as a dedicated IPMI port: Isolate your IPMI traffic in hardware

If you do have managed switches then in addition to the above (not recommended) three options you also have the following two options:

Add the IPMI interface to your existing single management network. You have one, right? You know, the network that you run Windows Updates over, perform administrative file transfers across, have hooked up to change, configuration, asset and remote management tools, and through which you remotely administer your servers from the one and only client Ethernet port that connects directly to your management workstation. Yep, that management network! The advantage of this is that you already have the VLAN, you likely already have DHCP services on it (it is your call whether you want to hunt around in the DHCP lease list for the specific allocation when you need to rescue a server in an emergency) and it is already reasonably secure.

The disadvantages of using the existing VLAN are that you may want to use encryption on the management network, and there may be packet sniffers involved as part of the day-to-day management of the network. IPMI is not going to fit in nicely with your enterprise policy and encryption software (e.g. IPsec). The inherent insecurity of IPMI (particularly before version 2.0) means that exposing the ability to power off systems to anything sniffing the management network may not be ideal, given the damage that could be caused. Thus, good design suggests that your IPMI, ILO and DRAC traffic should be further isolated into its own VLAN, separate from the main production network (that should go without saying) and separate again from the main management network.
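A quick way to sanity-check that separation on paper is to confirm that no two traffic classes share a VLAN ID or an overlapping subnet. The VLAN IDs and subnets below are hypothetical placeholders for your own addressing plan; only the standard-library `ipaddress` module is used.

```python
import ipaddress

# Hypothetical addressing plan: one VLAN ID and subnet per traffic class.
VLAN_PLAN = {
    "production": (10, ipaddress.ip_network("10.0.10.0/24")),
    "management": (20, ipaddress.ip_network("10.0.20.0/24")),
    "ipmi": (30, ipaddress.ip_network("10.0.30.0/24")),
}


def plan_problems(plan):
    """Report shared VLAN IDs or overlapping subnets between traffic classes."""
    problems = []
    names = sorted(plan)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            (vid_a, net_a), (vid_b, net_b) = plan[a], plan[b]
            if vid_a == vid_b:
                problems.append(f"{a} and {b} share VLAN {vid_a}")
            if net_a.overlaps(net_b):
                problems.append(f"{a} and {b} have overlapping subnets")
    return problems


print(plan_problems(VLAN_PLAN) or "IPMI is isolated from production and management")
```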

Which route you take is a design-time decision based upon the needs of your own environment. Personally, given a choice, I prefer to cable the management and IPMI/ILO/iDRAC networks using different colours on dedicated ports to guard against accidental disconnection, and in turn to isolate them into their own VLAN with a minimum number of intersection points, usually just a management VM or workstation.

The scope for something to go wrong is significantly reduced under this model, as is the pressure to meticulously configure security and keep firmware patched (mind, that isn’t a reason not to do it!). As an abstraction, you could also think of it in terms of a firmware management VLAN (IPMI) and a software management VLAN (Windows/Linux management tools), or a day-to-day management VLAN and your ‘get out of jail free’ VLAN.

Accessing the server’s IPMI interface using an Alias Address

When you are starting out with IPMI, or have a simpler configuration using a sideband port (where the IPMI port is shared with an active production network connection), you may want to connect to other IPMI devices directly from one of the servers that is itself an IPMI host. Alternatively, you may have a single-NIC management PC and want to connect to the same isolated IPMI network without losing access to your main network.

You can avoid sacrificing one of your network ports as a dedicated IPMI management port by making use of an alias IP address. The main advantage of this is that you do not have to make use of VLANs (which are not properly supported under IPMI 1.5 or older anyway) and thus do not require managed switch hardware.

An alias address is simply one or more additional IP addresses assigned to an already-addressed network adapter; these do not necessarily have to be part of the same subnet.

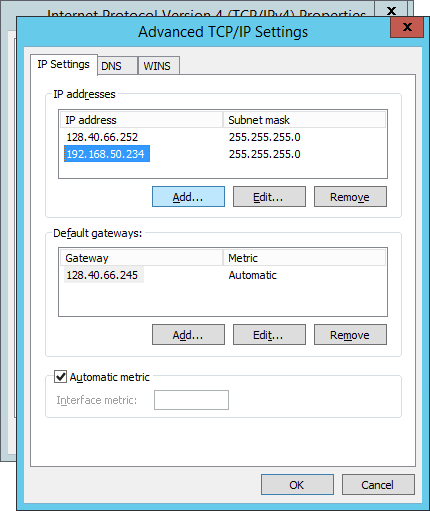

As you can see in the above screenshot, the servicing 128 address is complemented with a 192 address. This means that the network card can actively participate in both networks, although the latter is limited to its own broadcast domain as it doesn’t have a gateway configured.
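The effect of a gateway-less alias can be sketched as a next-hop decision. The addresses below are hypothetical stand-ins for the two in the screenshot, and the function checks subnets in order rather than performing a full longest-prefix-match routing lookup, so treat it as an illustration only.

```python
import ipaddress

# Hypothetical stand-ins: a primary (gateway-backed) address and a
# gateway-less alias on the IPMI subnet, both on the same adapter.
ADAPTER = {
    "primary": ipaddress.ip_interface("128.1.1.20/24"),
    "alias": ipaddress.ip_interface("192.168.0.20/24"),
}
DEFAULT_GATEWAY = ipaddress.ip_address("128.1.1.1")  # belongs to the primary only


def next_hop(destination: str) -> str:
    """Decide how the adapter would reach a destination address."""
    dest = ipaddress.ip_address(destination)
    for name, iface in ADAPTER.items():
        if dest in iface.network:
            return f"on-link via {name} address {iface.ip}"
    # Anything off both subnets goes to the gateway, which only the primary
    # address can use, so the alias never carries routed traffic.
    return f"routed via gateway {DEFAULT_GATEWAY}"


for target in ("192.168.0.51", "128.1.1.99", "8.8.8.8"):
    print(target, "->", next_hop(target))
# 192.168.0.51 -> on-link via alias address 192.168.0.20
# 128.1.1.99 -> on-link via primary address 128.1.1.20
# 8.8.8.8 -> routed via gateway 128.1.1.1
```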

You do not need to alias any adapter for the server’s own BMC to take part in the IPMI network; the firmware handles this once configured, and that configuration is completely transparent to the operating system. However, if you need the server itself (or a management PC) to be able to connect to the IPMI overlay network without adding NICs, an alias address is a good way to get started without resorting to VLANs.

To create an Alias

In Unix-like operating systems you would issue:

In Windows you can either use the Advanced TCP/IP Settings screen shown above, or you can use the following to achieve the same result:

Note: The alias address that you set on the adapter should not be the same as the one on the IPMI virtual firmware interface. It must be independent and unique on the IPMI subnet.
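One way to apply that note is to validate a proposed alias before adding it. The sketch below checks the alias against a hypothetical IPMI subnet and a hypothetical list of BMC addresses, then builds example commands, assuming Linux with iproute2 (`ip addr add`, run as root) and Windows `netsh interface ipv4 add address`. These are illustrative commands with made-up values, not the specific ones used in this article; check them against your own OS documentation before use.

```python
import ipaddress

# Hypothetical values: substitute your own IPMI subnet and BMC addresses.
IPMI_SUBNET = ipaddress.ip_network("192.168.0.0/24")
BMC_ADDRESSES = {ipaddress.ip_address(a) for a in ("192.168.0.51", "192.168.0.52")}


def alias_commands(alias: str, linux_dev: str, windows_name: str) -> dict:
    """Validate an alias against the note above and return example commands."""
    addr = ipaddress.ip_address(alias)
    if addr not in IPMI_SUBNET:
        raise ValueError(f"{addr} is not on the IPMI subnet {IPMI_SUBNET}")
    if addr in BMC_ADDRESSES:
        raise ValueError(f"{addr} is already used by a BMC firmware interface")
    if addr in (IPMI_SUBNET.network_address, IPMI_SUBNET.broadcast_address):
        raise ValueError(f"{addr} is not a usable host address")
    return {
        # Linux iproute2: no gateway is set, so the alias stays on-link only.
        "linux": f"ip addr add {addr}/{IPMI_SUBNET.prefixlen} dev {linux_dev}",
        # Windows netsh: adds a second IPv4 address to an existing adapter.
        "windows": (f'netsh interface ipv4 add address "{windows_name}" '
                    f"{addr} {IPMI_SUBNET.netmask}"),
    }


for os_name, cmd in alias_commands("192.168.0.20", "eth0", "Ethernet").items():
    print(f"{os_name}: {cmd}")
# linux: ip addr add 192.168.0.20/24 dev eth0
# windows: netsh interface ipv4 add address "Ethernet" 192.168.0.20 255.255.255.0
```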

There is, however, a problem with this approach if you are attempting to access IPMI from an IPMI-enabled server: the server will not be able to see itself.

In testing, ping, ipmitool and IPMI Viewer were all unable to reach the local server’s own IPMI interface when using this type of configuration. This of course isn’t really too much of a problem, because your management server is evidently working: you are administering IPMI using it. However, if you attempt to communicate with its own interface, or keep a list of all hosts in IPMI Viewer, the host will simply appear as offline.

Using a Virtual Machine as an IPMI Management Console

The issue outlined above may seem trivial; however, I have covered it to highlight a problem that I directly encountered with the seemingly sensible idea of running the management server as a virtual machine hosted on a large multi-node cluster. A multi-node cluster is a fairly safe place to keep your management tools, and it allows easy sharing amongst admin team members without having to route large numbers of VLANs into desktop machines (although I always keep one standby physical server bound to all management networks and excluded from the public production and client networks for such emergencies).

Expanding on the issue outlined above, there is an obvious path flaw in the logical network. If a physical server cannot reach its own IPMI interface, then neither can a hypervisor. Thus, when the management server is running as a VM on the hypervisor stack, whichever node it is running on at the time will appear offline.
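That observation, and the troubleshooting lesson that follows from it, can be captured in a tiny model. It assumes a sideband configuration in which a VM cannot reach the BMC of the host it is running on; the host names are hypothetical.

```python
from typing import Dict, List

# Simplified model of the observed behaviour; host names are hypothetical.
CLUSTER = ["hv01", "hv02", "hv03", "hv04"]


def apparent_bmc_state(vm_host: str, hosts: List[str] = CLUSTER) -> Dict[str, str]:
    """How each host's BMC appears from a management VM running on vm_host."""
    # Assumed: the VM cannot reach its own host's sideband BMC, while every
    # other BMC is reachable across the switch.
    return {h: "appears offline" if h == vm_host else "online" for h in hosts}


def is_false_alarm(offline_host: str, vm_host: str) -> bool:
    """An 'offline' BMC on the VM's own host says nothing about its health."""
    return offline_host == vm_host


print(apparent_bmc_state("hv02"))
# {'hv01': 'online', 'hv02': 'appears offline', 'hv03': 'online', 'hv04': 'online'}
print(is_false_alarm("hv02", vm_host="hv02"))  # True
```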

If the management server VM moves to a different hypervisor, the host that appears offline will change, subject to ARP cache expiry: in testing, the new host appeared offline immediately, but the now-reachable one did not reappear until ARP had fully flushed.
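The lag can be illustrated with a toy ARP cache that keeps entries, including failed resolutions, until they age out or are flushed. This is a hypothetical model: it assumes the management VM cached a failed resolution for its former host's BMC, and the 60-second TTL, IP and MAC are arbitrary; real ARP timers and negative-caching behaviour are OS specific.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple


@dataclass
class ArpCache:
    """Toy ARP cache: entries (even failures) are reused until older than ttl."""
    ttl: float
    # ip -> (MAC, or None for a failed resolution; time the entry was created)
    entries: Dict[str, Tuple[Optional[str], float]] = field(default_factory=dict)

    def resolve(self, ip: str, now: float,
                network: Callable[[str], Optional[str]]) -> Optional[str]:
        """Return a MAC for ip, asking the network only on a miss or expiry."""
        cached = self.entries.get(ip)
        if cached is not None and now - cached[1] <= self.ttl:
            return cached[0]  # fresh entry, even if it records a failure
        mac = network(ip)     # stands in for sending a new ARP request
        self.entries[ip] = (mac, now)
        return mac

    def flush(self) -> None:
        self.entries.clear()


BMC_A = "192.168.0.51"  # hypothetical BMC of the VM's original host
state = {"vm_on_host_a": True}


def network(ip: str) -> Optional[str]:
    # The VM cannot reach the BMC of the host it is running on.
    return None if state["vm_on_host_a"] else "00:1a:2b:3c:4d:5f"


cache = ArpCache(ttl=60.0)
print(cache.resolve(BMC_A, 0.0, network))   # None: VM runs on host A
state["vm_on_host_a"] = False                # VM migrates to host B
print(cache.resolve(BMC_A, 30.0, network))  # None: stale failure still cached
print(cache.resolve(BMC_A, 61.0, network))  # 00:1a:2b:3c:4d:5f after expiry
```

Calling `cache.flush()` after the migration has the same effect as waiting, which mirrors expiring the ARP cache by hand.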

Again, in a real-world scenario this isn’t much of an issue: if you need to IPMI into a host, most of the time it is because that host is down. If the host is down, then the virtual management server is not going to be running on it any more; it will have migrated to a new host, and thus you will be able to see the downed server. Of course, stranger things have happened!

The point of this article is to highlight what caused me a little head scratching and some incorrect troubleshooting, whereby I assumed that the IPMI controller on a hypervisor had failed because it could not be reached from a management VM that (you guessed it) turned out to be running on that very same hypervisor. Once I had moved the VM and expired the ARP cache, the offline server changed to the new host node and the previously offline server popped back to life.

Notes on VLAN use

If you plan to use a VLAN to isolate the traffic onto your IPMI interface, obviously you need to ensure that your IPMI BMC is capable of filtering and in turn tagging VLAN traffic against its interface.
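For reference, the tag that the BMC (and the switch) must understand is the 4-byte IEEE 802.1Q header: a TPID of 0x8100 followed by the Tag Control Information, which packs a 3-bit priority (PCP), a 1-bit DEI flag and the 12-bit VLAN ID. The sketch below builds and parses that tag; VLAN 30 is a hypothetical IPMI VLAN.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag inserted after the source MAC address."""
    if not 1 <= vid <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP is 3 bits and DEI is 1 bit")
    tci = (pcp << 13) | (dei << 12) | vid  # Tag Control Information
    return struct.pack("!HH", TPID_8021Q, tci)


def read_vid(tag: bytes) -> int:
    """Extract the VLAN ID a receiver (switch, NIC or BMC) would filter on."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci & 0x0FFF


tag = vlan_tag(30)  # hypothetical IPMI VLAN
print(tag.hex(), read_vid(tag))  # 8100001e 30
```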

One thing to keep in mind is that Intel (particularly) have dropped a lot of driver update support for anything older than the I340 series with the release of Windows Server 2012 R2 (something that I learnt the hard way when I assumed that Windows Server 2012 ‘R1’ support would automatically equate to R2 support).

In practice this means that the Windows driver itself will not expose the Intel Device Manager extensions, and thus you might find it difficult to tag the non-IPMI side of the shared interface onto the correct non-IPMI VLAN.

Remember that you will need to set the switch port to trunk mode and set the allowed VLAN IDs for the port to both your production VLAN and your IPMI VLAN (or more still if you are using the port as part of a virtualised converged fabric). Bear in mind that the ports down which you can send the IPMI VLAN are limited to one port or a subset of the available NIC ports (depending upon the specification of your BMC).
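Those rules can be written down as a small check over a port plan. Everything here is hypothetical: the VLAN IDs, port names and the set of BMC-capable ports stand in for your own switch configuration and your BMC's specification. Allowing the IPMI VLAN on a port the BMC cannot use is flagged because it widens exposure without adding reachability.

```python
# Hypothetical switch-port plan for one server.
PRODUCTION_VLAN, IPMI_VLAN = 10, 30
BMC_CAPABLE_PORTS = {"lom1"}  # depends on the BMC's specification

PORTS = {
    "lom1": {"mode": "trunk", "allowed": {PRODUCTION_VLAN, IPMI_VLAN}},
    "lom2": {"mode": "trunk", "allowed": {PRODUCTION_VLAN, IPMI_VLAN}},
}


def check_ports(ports):
    """Return a list of problems with the trunk/allowed-VLAN plan."""
    problems = []
    for name, cfg in sorted(ports.items()):
        if name in BMC_CAPABLE_PORTS:
            if cfg["mode"] != "trunk":
                problems.append(f"{name}: must be a trunk to carry both VLANs")
            if not {PRODUCTION_VLAN, IPMI_VLAN} <= cfg["allowed"]:
                problems.append(f"{name}: allow VLANs {PRODUCTION_VLAN} and {IPMI_VLAN}")
        elif IPMI_VLAN in cfg["allowed"]:
            problems.append(f"{name}: IPMI VLAN allowed on a port the BMC cannot use")
    return problems


for problem in check_ports(PORTS):
    print(problem)
# lom2: IPMI VLAN allowed on a port the BMC cannot use
```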

Finally, you should be aware that NIC ‘link-up’ convergence can be noticeably slower when using VLAN trunk ports and tag isolation. This isn’t limited to IPMI interfaces, but for troubleshooting purposes you should double the time you allow before concluding that the port is in fact not working. For example, if your convergence time on an untagged access port is 8-10 pings, you should wait for 16-20 pings while troubleshooting, to allow the VLAN to come up and register with Windows and/or the IPMI BMC.
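That rule of thumb can be built into a polling helper. The sketch takes any probe callable (in practice something that wraps a ping or an `ipmitool` call; here a hypothetical fake BMC that only answers from the 14th attempt) and doubles the attempt budget for VLAN-tagged trunk ports before declaring the port dead.

```python
import time
from typing import Callable


def wait_for_link(probe: Callable[[], bool], baseline_attempts: int,
                  vlan_tagged: bool, interval: float = 1.0,
                  sleep: Callable[[float], None] = time.sleep) -> int:
    """Probe until success and return the attempt number that succeeded.

    The attempt budget is doubled for VLAN-tagged trunk ports, per the
    rule of thumb above, before the port is declared dead.
    """
    budget = baseline_attempts * (2 if vlan_tagged else 1)
    for attempt in range(1, budget + 1):
        if probe():
            return attempt
        sleep(interval)
    raise TimeoutError(f"no response after {budget} attempts")


def fake_bmc(ready_after: int) -> Callable[[], bool]:
    """Hypothetical probe standing in for a ping: succeeds from attempt N on."""
    calls = {"n": 0}

    def probe() -> bool:
        calls["n"] += 1
        return calls["n"] >= ready_after
    return probe


def no_sleep(_: float) -> None:
    pass  # skip real waiting in this demonstration


print(wait_for_link(fake_bmc(14), 10, vlan_tagged=True, sleep=no_sleep))  # 14
try:
    wait_for_link(fake_bmc(14), 10, vlan_tagged=False, sleep=no_sleep)
except TimeoutError as exc:
    print(exc)  # no response after 10 attempts
```

With an untagged budget of 10 probes the slow-converging port would have been written off; the doubled budget reaches it.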

In real-world situations, this means that Windows can be past the kernel initialisation process on a Dell PowerEdge before the IPMI interface is responding to traffic following a warm boot. The point of this: the use of VLAN tagging can lull you into a sense of insecurity, in that you think that things are not working when all that is actually required is patience.